This integration enables you to synchronize data from Databricks to Insider One seamlessly. The process involves authenticating with Databricks, selecting relevant data tables, mapping data fields, and configuring synchronization settings.

Essentials before Integration

Before you begin, ensure the following prerequisites are met to enable a smooth and secure data import from Databricks to Insider One.

You must have a Databricks account with account admin privileges to set up the integration.

Ensure you have access to the catalog, schema, and tables that contain user and event data you want to sync with Insider One.

The Insider One Databricks connector supports Databricks on AWS, Azure, and Google Cloud.

Step 1: Create Databricks Source Integration

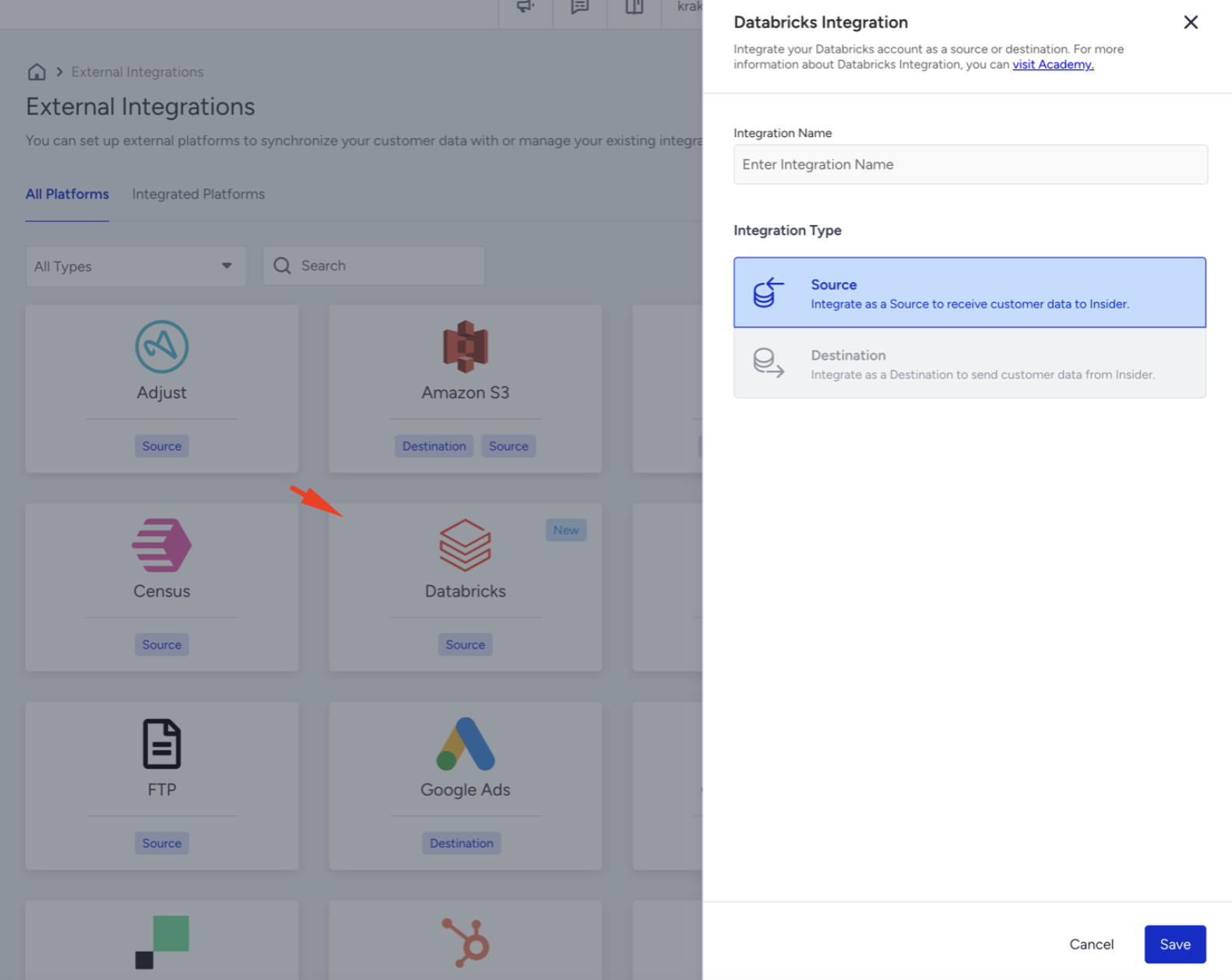

Navigate to Components > Integrations > External Integrations in Insider One’s InOne panel.

Select the Databricks integration and create a Databricks Source Integration.

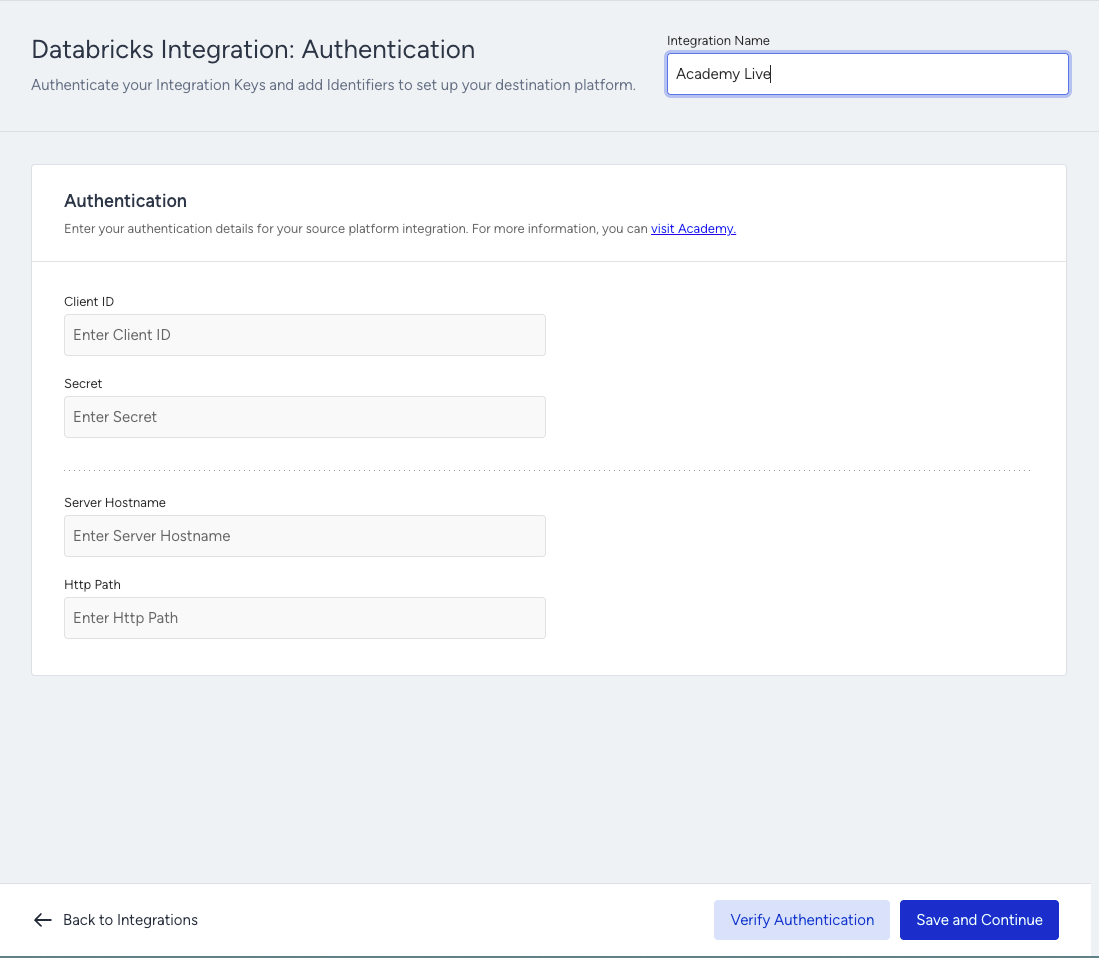

Enter your credentials on the Authentication page. You should fill in the following required fields:

Client ID

Secret

Server Hostname

HTTP Path

Click Verify Authentication and confirm your authentication before saving and continuing.

If you encounter any error messages, check your integration settings and correct the errors before continuing.

Step 2: Configure a Service Principal with OAuth

To connect Insider One with Databricks using OAuth, you’ll need to configure a Service Principal in your Databricks workspace.

Step 2.1: Create a Service Principal

In your Databricks workspace, click your user profile on the top right and select Settings.

Go to Identity and access > Service Principals and click Manage.

Select Add new, enter a name, and click Add.

Create an OAuth Secret Pair

On the Service Principal page, go to the Secrets tab.

Click Generate secret.

Copy the Secret and Client ID shown in the dialog.

Secret

Client ID (UUID format, e.g., 63fc7e90-a8a2-4639-afd8-36ef6bb67cfa)

These values are shown only once. Save them securely before closing the dialog.

Step 2.2: Grant Catalog Permissions

Your Service Principal needs catalog permissions to query your Databricks data.

In the Databricks sidebar, click Catalog and find your schema.

Open the Permissions tab and click Grant.

In the Principals box, enter your Service Principal name.

Select the Data Editor preset, or manually assign the following:

USE SCHEMA

EXECUTE

READ VOLUME

SELECT

USE CATALOG
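The same privileges can also be granted with SQL instead of through the Permissions tab. The following is a minimal sketch, assuming a Unity Catalog workspace. The names `my_catalog` and `my_schema` are placeholders, and the principal is referenced by its Client ID (application ID) in backticks; the ID shown is the example value from Step 2.1, not a real one.

```sql
-- Placeholder catalog/schema names and an example Client ID: replace all three.
GRANT USE CATALOG ON CATALOG my_catalog TO `63fc7e90-a8a2-4639-afd8-36ef6bb67cfa`;
GRANT USE SCHEMA  ON SCHEMA my_catalog.my_schema TO `63fc7e90-a8a2-4639-afd8-36ef6bb67cfa`;
GRANT SELECT      ON SCHEMA my_catalog.my_schema TO `63fc7e90-a8a2-4639-afd8-36ef6bb67cfa`;
GRANT EXECUTE     ON SCHEMA my_catalog.my_schema TO `63fc7e90-a8a2-4639-afd8-36ef6bb67cfa`;
GRANT READ VOLUME ON SCHEMA my_catalog.my_schema TO `63fc7e90-a8a2-4639-afd8-36ef6bb67cfa`;

-- Review what the Service Principal can now do on the schema.
SHOW GRANTS `63fc7e90-a8a2-4639-afd8-36ef6bb67cfa` ON SCHEMA my_catalog.my_schema;
```

Granting at the schema level lets the privileges apply to every table in that schema. If you prefer a narrower scope, `SELECT` can be granted on the single table you plan to sync instead.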

You must enable Change Data Feed (CDF) on the table you share with Insider One for the integration to work. Use one of the following commands.

Enable CDF when creating a table:

    CREATE TABLE my_table (
      id INT,
      name STRING,
      age INT
    )
    USING DELTA
    TBLPROPERTIES (delta.enableChangeDataFeed = true);

Enable CDF for an existing table:

    ALTER TABLE my_table
    SET TBLPROPERTIES (delta.enableChangeDataFeed = true);

Refer to Databricks’ documentation for further information.
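After running either command, you may want to confirm that the property took effect. A small sketch, reusing the `my_table` name from the commands above:

```sql
-- Shows the current value of the CDF property for the table.
SHOW TBLPROPERTIES my_table ('delta.enableChangeDataFeed');

-- Lists the table's versions, including the one in which the property was set.
DESCRIBE HISTORY my_table;
```

Changes are only recorded from the table version in which CDF was enabled, so rows written before that version do not appear in the change feed.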

Step 2.3: Grant SQL Warehouse Permissions

If your Service Principal doesn’t have access to compute resources, you’ll need to grant permissions on the SQL warehouse.

In the Databricks sidebar, click Compute, then go to the SQL Warehouses page.

Locate your existing warehouse (or create a new one if needed).

Open the ⋮ menu next to the warehouse name and select Permissions. (If you don’t see this option, contact your Databricks account admin.)

In the Permissions panel:

i. Enter your Service Principal name.

ii. Set the permission level to Can use.

iii. Click Add to save.

Step 2.4: Add Databricks Credentials in Insider One

Once your Service Principal is configured, copy the following details from your SQL Warehouse Connection Details page (see screenshot above) and paste them into the InOne panel:

Server Hostname

HTTP Path

Step 3: Verify Your Authentication

After entering all required fields in the InOne panel:

Click Verify Authentication to confirm the connection.

If the verification is successful, click Save.

You will then be redirected to the Match Screen to continue setup.

Step 4: Select your Table

Choose the catalog, schema, and table you want to sync with Insider One.

Keep in mind:

You can select only one table per integration. If you need more, create separate integrations.

The table must be a Delta table. Refer to Databricks’ guide for instructions on converting other tables to Delta.

The table must have Change Data Feed (CDF) enabled. Refer to Step 2.2 to learn how to enable CDF.

Views can’t be selected; you must select a table.
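Before selecting a table, you can check these requirements from a Databricks SQL editor. This is a sketch with placeholder names (`my_catalog`, `my_schema`, `my_table`):

```sql
-- The "format" column of the result should read "delta".
DESCRIBE DETAIL my_catalog.my_schema.my_table;

-- Lists the views in the schema; any object listed here cannot be selected.
SHOW VIEWS IN my_catalog.my_schema;

-- Confirms that Change Data Feed is enabled on the table.
SHOW TBLPROPERTIES my_catalog.my_schema.my_table ('delta.enableChangeDataFeed');
```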

Step 5: Map your Data

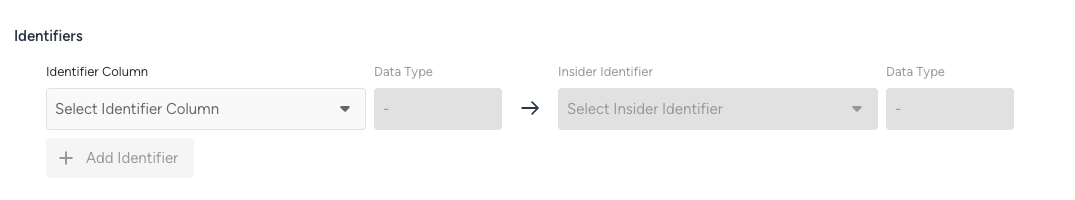

Map the column that holds the user identifier. Insider One uses this identifier to insert data.

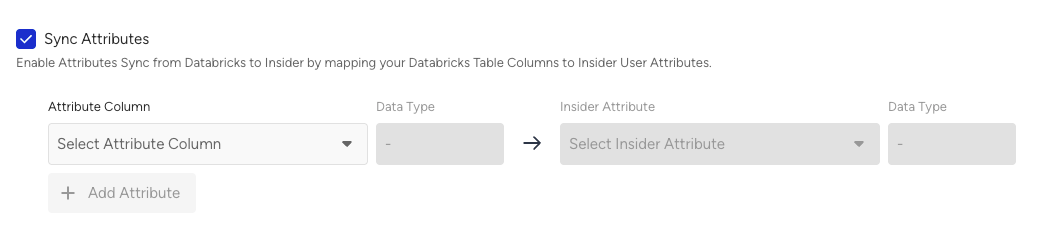

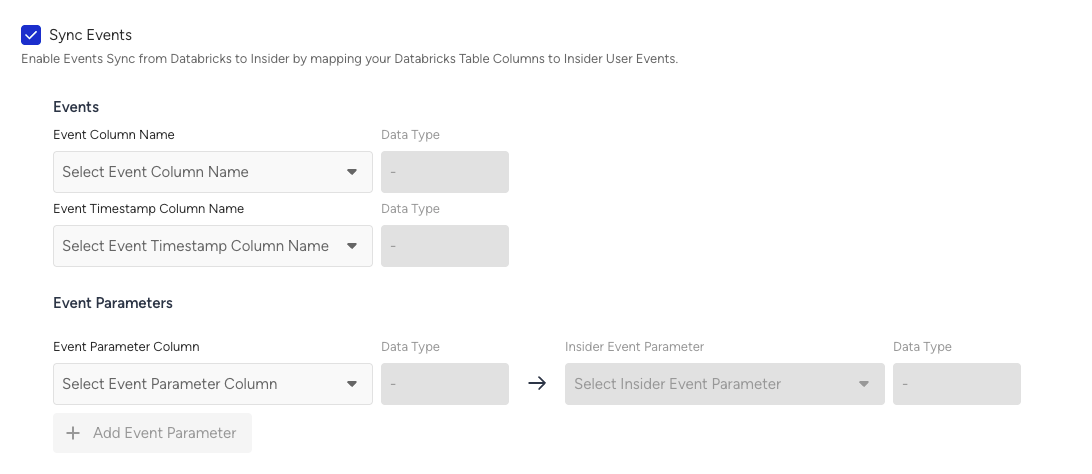

Turn on the Sync Attributes and/or Events sections, depending on the data in your selected table.

To sync attributes, match each column that represents an attribute with the corresponding attribute in Insider One.

To sync the Events:

Select the column that represents the event name in your table.

Select the column that represents the event timestamp in your table.

Select the column that represents event parameters in your table.

Data Type Matching

Ensure that the data types in Databricks and Insider One are aligned. Use the table below to check the correct data type mappings.

| Data Type | Notes |
|---|---|
| String | Used for text attributes, identifiers, and event parameters. |
| DateTime | Insider One automatically converts any datetime format accepted by Databricks. RFC3339 format is recommended for consistency. |
| Number | Used for numerical attributes (e.g., a_age, e_quantity, e_unit_price). |
| Boolean | Used for opt-in attributes (e.g., a_email_optin). |
| Array (Strings), Array (Numbers) | Used for properties like |
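To compare your columns against the data types listed above, you can list each column and its type in Databricks. A one-line sketch with a placeholder table name:

```sql
-- Returns one row per column with its name and data type.
DESCRIBE TABLE my_catalog.my_schema.my_table;
```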

Default Attribute, Event & Parameter Data Mapping Table

| Parameter | Type | Description | Data Type | Required |
|---|---|---|---|---|
| email | Attribute | User's email address. Can be used as an identifier. | String | No |
| phone_number | Attribute | User's phone number in E.164 format (e.g. +6598765432). Can be used as an identifier. | String | No |
| email_optin | Attribute | User's permission for marketing emails. | Boolean | No |
| gdpr_optin | Attribute | User's permission for Insider One campaigns, data collection, and processing. | Boolean | No |
| sms_optin | Attribute | User's permission for SMS. | Boolean | No |
| whatsapp_optin | Attribute | User's permission for WhatsApp messages. | Boolean | No |
| name | Attribute | User's name. | String | No |
| surname | Attribute | User's surname. | String | No |
| birthday | Attribute | User's birthday in RFC 3339 format (e.g. 1993-03-12T00:00:00Z). | Date/Time | No |
| gender | Attribute | Gender of the user. | String | No |
| age | Attribute | Age of the user. | Number | No |
| language | Attribute | Language information of the user. | String | No |
| country | Attribute | The user's country information in ISO 3166-1 alpha-2 format. | String | No |
| city | Attribute | City information of the user. | String | No |
| uuid | Attribute | The user’s UUID. Can be used as an identifier. | String | No |
| event_name | Event | Name of the event. | String | Yes |
| timestamp | Event Parameter | Event time. | Datetime | Yes |
| event_group_id | Event Parameter | Event group ID. | String | No (Yes only when the event_name is purchase or cart_page_view) |
| product_id | Event Parameter | Unique product ID. | String | No |
| name | Event Parameter | Name of the product. | String | No |
| taxonomy | Event Parameter | Category tree of the product. | Array | No |
| currency | Event Parameter | Currency used for product pricing, in ISO 4217 format (e.g., USD). | String | No (Yes only when the event_name is purchase or cart_page_view) |
| quantity | Event Parameter | Quantity of the product. | Integer | No (Yes only when the event_name is purchase) |
| unit_price | Event Parameter | Price of the product without any discount(s). | Float | No |
| unit_sale_price | Event Parameter | Unit price of the product. | Float | No (Yes only when the event_name is purchase or cart_page_view) |
| color | Event Parameter | Color of the product (selected by user). | String | No |
| size | Event Parameter | Size of the product (selected by user). | String | No |
| shipping_cost | Event Parameter | Shipping cost of the items in the basket. | String | No |
| promotion_name | Event Parameter | Name of the promotion. | String | No |
| promotion_discount | Event Parameter | Total amount of discount applied by promotions. | Float | No |
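Several rows in the table name a specific format (E.164, ISO 3166-1 alpha-2). A query along these lines can surface rows that do not match those shapes before a sync. It is a sketch only: the table `user_attributes` and its column names are hypothetical, and Insider One's exact validation rules are not listed in this guide, so the patterns mirror only the formats named above.

```sql
-- Hypothetical table and column names; adjust them to your own schema.
SELECT
  email,
  phone_number,
  country
FROM my_catalog.my_schema.user_attributes
WHERE phone_number NOT RLIKE '^[+][1-9][0-9]{1,14}$'  -- not shaped like E.164
   OR country NOT RLIKE '^[A-Z]{2}$';                 -- not two uppercase letters
```

The country pattern checks only the shape of the value, not whether the code is actually assigned in ISO 3166-1. Rows where a column is NULL are not flagged by these conditions, which suits optional attributes.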

If your Databricks table contains purchase events, make sure to:

If a basket contains more than one item, add each item as a separate row in your Databricks table.

Include the e_guid (event_group_id) parameter for each purchase event. This is needed to connect different products to a single cart.
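The two rules above can be illustrated with a small table in which one basket of two products becomes two rows that share the same event group ID. This is a sketch under assumptions: the table name, the column names, the sample values, and the choice of one column per event parameter are all hypothetical, so adapt the layout to how your own table stores event parameters.

```sql
-- Hypothetical table; columns are mapped to Insider One fields in Step 5.
CREATE TABLE IF NOT EXISTS my_catalog.my_schema.purchase_events (
  email            STRING,     -- user identifier
  event_name       STRING,
  event_timestamp  TIMESTAMP,
  event_group_id   STRING,     -- shared by all rows of one cart
  product_id       STRING,
  currency         STRING,
  quantity         INT,
  unit_sale_price  DOUBLE
)
USING DELTA
TBLPROPERTIES (delta.enableChangeDataFeed = true);

-- One purchase with two products: two rows, same event_group_id.
INSERT INTO my_catalog.my_schema.purchase_events VALUES
  ('jane@example.com', 'purchase', TIMESTAMP'2024-05-01 10:15:00', 'order-1001', 'sku-1', 'USD', 2, 19.99),
  ('jane@example.com', 'purchase', TIMESTAMP'2024-05-01 10:15:00', 'order-1001', 'sku-2', 'USD', 1, 5.50);
```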

Refer to Events & Attributes for further information on all default events and attributes.

If your column's data type in Databricks is TIMESTAMP, the specific date format is not critical. Insider One automatically converts any datetime format that is accepted by Databricks.

Step 6: Launch your Integration

Select your Sync Frequency and launch your integration.

Limitations

Views are not supported; you can only use tables to map your data.

Each table must include an identifier.

Custom attributes or event parameters must be predefined in Insider One (via the Events & Attributes page).

For Purchase Events:

Add one row per product in a basket.

Include e_guid (event_group_id) to link multiple rows to a single cart.

The synchronization process does not support DELETE operations. If a record is deleted from your Databricks table, the corresponding data will not be removed from Insider One on the next sync.
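Because deletions are not propagated, it can help to see which rows were deleted in Databricks so you can handle them separately. With Change Data Feed enabled, Delta records each deleted row with `_change_type = 'delete'`. A sketch, assuming a placeholder table name and a starting version of 1:

```sql
-- Replace 1 with a table version at or after the one in which CDF was enabled.
SELECT *
FROM table_changes('my_catalog.my_schema.my_table', 1)
WHERE _change_type = 'delete';
```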

Data Import Logic

During the Databricks → Insider One synchronization:

If any identifier coming from Databricks is invalid, the entire record is not sent.

If an attribute coming from Databricks is invalid, only that specific attribute is skipped.

If an event parameter coming from Databricks is invalid, the entire event is excluded from processing.

Frequently Asked Questions (FAQ)

Q: What permissions do I need in my Databricks account to set up the integration?

A: You need a Databricks account with account admin privileges to configure the necessary components like the Service Principal and permissions.

Q: Can I sync data from more than one Databricks table at a time?

A: No, you can only select one table per integration. If you need to sync data from multiple tables, you must create a separate integration for each one.

Q: What are the requirements for a Databricks table to be eligible for syncing?

A: The table must be a Delta table, and it must have the Change Data Feed (CDF) feature enabled.

Q: How often can I sync my data from Databricks to Insider One?

A: You can set the sync frequency to be either Daily (at a specific time you choose) or Hourly (at the beginning of every hour).

Q: What happens if I try to map a custom attribute that doesn't exist in Insider One?

A: The integration will fail for that attribute. All custom attributes and event parameters must be created in your Insider One account on the Events & Attributes page before you set up the mapping.

Q: What happens if I delete a record in my Databricks table? Will it be removed from Insider One?

A: No. The integration currently only processes additions and updates to records. If you delete a record in Databricks, the corresponding data will remain in Insider One and will not be removed during subsequent syncs.

Q: Which cloud platforms are supported?

A: The Insider One Databricks connector supports Databricks on AWS, Azure, and Google Cloud.