Delphi, Insider One’s automated machine learning platform, gives you the best model achievable with the data you provide. The model is trained on your own customer data and uses the features best suited to your needs.

Insider One continuously monitors model metrics, works to improve them, and ensures that segmentation always uses the best model available.

Delphi provides the infrastructure for all machine learning tasks: training models, supporting the workflow, managing data, evaluating models, deploying models, making predictions, and monitoring predictions. Delphi aims to decouple feature engineering, model training, and inference. This lets it standardize machine learning pipelines, reduce model training and inference costs, and accelerate prototyping and new model development.


The ML cycle begins with understanding business needs, followed by outlining the product and gathering data. The raw data is then cleaned and processed, labels and features are computed, and the data is prepared for training.

This guide covers the following concepts:

Labeling and Model Training

Labeling runs periodically for all Predictive algorithms, which are built on logic tailored to each business problem. The resulting labels are saved to the label store for later use. A new model is trained for each algorithm every week. The training job combines the labels with the best features and saves the trained models to the model store. Each model is then evaluated with the metric best suited to its problem, and the best model from the last 30 days is selected for inference.
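The selection step described above can be illustrated with a minimal Python sketch. It is not Delphi's actual implementation: the `TrainedModel` record, the `select_model_for_inference` function, the example model IDs, and the assumption that a higher metric value is better are all hypothetical and used only for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass(frozen=True)
class TrainedModel:
    """Hypothetical record for one entry in the model store."""
    model_id: str
    trained_on: date
    metric: float  # evaluation score; higher is assumed to be better


def select_model_for_inference(
    models: List[TrainedModel], today: date, window_days: int = 30
) -> Optional[TrainedModel]:
    """Return the highest-scoring model trained within the last `window_days` days."""
    cutoff = today - timedelta(days=window_days)
    recent = [m for m in models if cutoff <= m.trained_on <= today]
    if not recent:
        return None  # no model was trained inside the window
    return max(recent, key=lambda m: m.metric)


# Weekly training runs (illustrative values only).
model_store = [
    TrainedModel("ltp-w1", date(2024, 5, 6), 0.81),
    TrainedModel("ltp-w2", date(2024, 5, 13), 0.84),
    TrainedModel("ltp-w3", date(2024, 5, 20), 0.79),
    TrainedModel("ltp-old", date(2024, 3, 4), 0.90),  # outside the 30-day window
]

best = select_model_for_inference(model_store, today=date(2024, 5, 27))
print(best.model_id)  # ltp-w2
```

In this sketch, the older model is ignored even though it has the highest score, because only models trained within the 30-day window are candidates.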

Segmentation and Inference

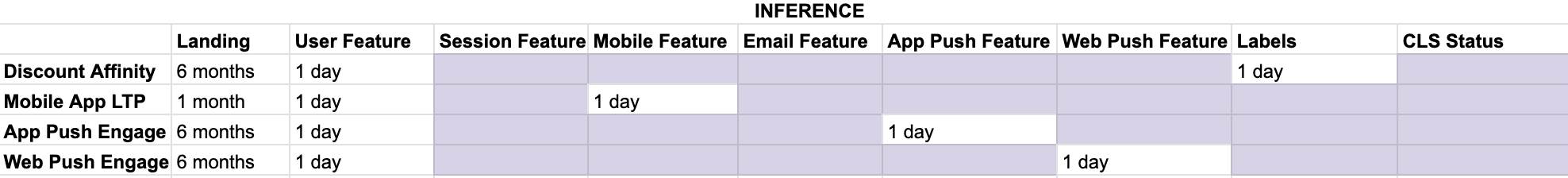

The Inference job feeds your most recent unlabeled data to Insider One’s models. The output is a set of user segments, called Predictive audiences. For example, the Likelihood to Purchase model identifies two segments: users who are most likely to purchase at that time and users who are not.
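As a rough illustration of how model output could become two segments, the Python sketch below splits users by a score threshold. This article does not describe how Delphi derives segments, so the `assign_segments` function, the segment names, the example scores, and the 0.5 threshold are all assumptions made for this example.

```python
from typing import Dict, List


def assign_segments(
    scores: Dict[str, float], threshold: float = 0.5
) -> Dict[str, List[str]]:
    """Split users into two segments by comparing each score to a threshold.

    The threshold rule is an assumption for illustration only.
    """
    segments: Dict[str, List[str]] = {
        "likely_to_purchase": [],
        "not_likely_to_purchase": [],
    }
    for user_id, score in scores.items():
        key = "likely_to_purchase" if score >= threshold else "not_likely_to_purchase"
        segments[key].append(user_id)
    return segments


# Hypothetical purchase-likelihood scores produced by an inference job.
scores = {"u1": 0.91, "u2": 0.12, "u3": 0.50}
segments = assign_segments(scores)
print(segments)
```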

Real-time Algorithms

Real-time algorithms are available for Likelihood to Purchase. The main difference between batch and real-time algorithms is the inference period. Batch inference jobs run daily and use user features, whereas real-time algorithms use session features and return inferences in real time.